In this, the final post in my series looking at how the new SAP Information Design Tool (IDT) can support the delivery of Agile Analytics, I’m going to be reviewing the capacity of the IDT to cope with adaptive design processes. As in the previous two posts (covering Collaborative Working and Testing), I’m taking my lead on Agile Analytics from Ken Collier’s excellent, recent book on the topic. In “Agile Analytics”, Collier devotes the first of his chapters on Technical Delivery Methods to the concepts behind evolving, excellent design. It’s a superb guide to the benefits, challenges, approaches and practical design examples of delivering through an adaptive design process, and I’m not going to attempt to capture it all here. In a nutshell, though, Collier argues that “Agility benefits from adaptive design” and stresses that this is not a replacement methodology for your existing DW/BI design techniques but rather a state of mind that uses those techniques to deliver more value, faster.

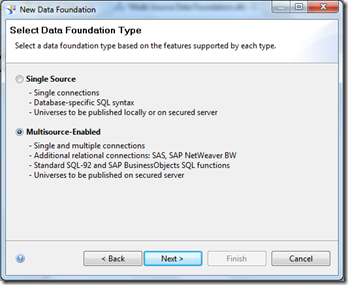

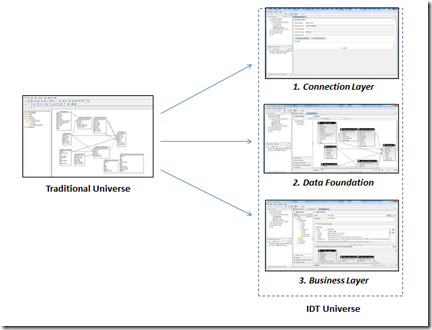

SAP’s IDT primarily supports this adaptive design approach by making it easier to adapt the data access layer (the Universe) to the database refactoring involved in developing your DW solutions. Essentially, it does this through its much-famed ability to deliver multi-source Universes, something simply not possible in the traditional Universe Designer.

Let’s look at this using an example development requirement. Our fearless, collaborative developers from the last two blogs, Tom and Huck, are into the next iteration of their agile development cycle. One of the user stories they have to satisfy is to bring in a new ‘Cost of Sales’ measure. This is required to be made available in the existing eFashion Universe, with daily updates of the figure in the underlying warehouse. At present users calculate the figure weekly using manual techniques. Development tasks accepted for this iteration include developing the ETL to implement the measure in the Warehouse, amending the Universe to expose it, and adding it to key reports. There are only four weeks in the iteration so they want to make effective use of all the developers’ time throughout. Tom is looking after ETL whilst Huck is responsible for the Universe and Report changes.

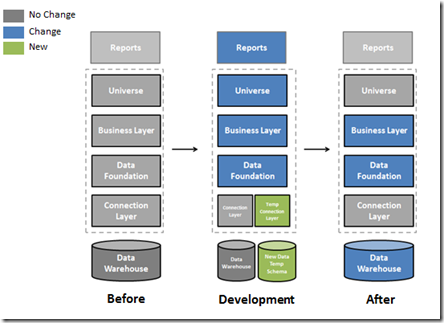

Tom gets on with the ETL work but has some calculation specs to work out first, so he can’t deliver a new ‘Cost of Sales’ field in the Warehouse for Huck to use in the Universe for at least two weeks. This is awkward as Huck wants to get on with working with the users on report specifications but knows it will be difficult without real data. Huck decides to take advantage of the IDT’s capability to federate data sources together. He’ll create a temporary database on a separate development schema into which he will load the weekly figures currently prepared by the business. He will then be able to integrate this into the existing IDT Project so that the ‘Cost of Sales’ measure object can be added to the existing Universe. Against this he can work with the users to define and approve reports using real, weekly, data. Once Tom has finished defining the new ETL and ‘Cost of Sales’ exists in the Warehouse, Huck can then rebuild the Data Foundation and Business Layer elements of the IDT Project to point to this new, daily source. The work done on the Universe and Reports will remain, but it will once again be fed by a single source. All that remains is for the users to confirm they are happy with the daily figures in the reports.
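To make the idea of federating a temporary source with the existing warehouse a little more concrete, here is a rough sketch in Python. It is not how the IDT works internally: two SQLite databases attached to one connection merely stand in for the warehouse and for Huck’s temporary schema, and every table and column name (`weekly_sales`, `weekly_cost_of_sales`, `week_id` and so on) is hypothetical, as are the figures.

```python
import sqlite3


def build_federated_demo():
    """Create two separate databases reachable through one connection."""
    # The main database plays the role of the existing warehouse source.
    conn = sqlite3.connect(":memory:")
    # A second in-memory database stands in for the temporary prototype schema.
    conn.execute("ATTACH DATABASE ':memory:' AS prototype")

    # Hypothetical tables and made-up figures, for illustration only.
    conn.execute(
        "CREATE TABLE main.weekly_sales "
        "(week_id INTEGER PRIMARY KEY, sales_revenue REAL)"
    )
    conn.execute(
        "CREATE TABLE prototype.weekly_cost_of_sales "
        "(week_id INTEGER PRIMARY KEY, cost_of_sales REAL)"
    )
    conn.executemany(
        "INSERT INTO main.weekly_sales VALUES (?, ?)",
        [(1, 1000.0), (2, 1200.0), (3, 900.0)],
    )
    conn.executemany(
        "INSERT INTO prototype.weekly_cost_of_sales VALUES (?, ?)",
        [(1, 600.0), (2, 700.0)],
    )
    return conn


def federated_rows(conn):
    """Join across both sources, keeping weeks that have no cost figure yet."""
    sql = """
        SELECT s.week_id, s.sales_revenue, c.cost_of_sales
        FROM main.weekly_sales AS s
        LEFT JOIN prototype.weekly_cost_of_sales AS c
               ON c.week_id = s.week_id
        ORDER BY s.week_id
    """
    return conn.execute(sql).fetchall()
```

Calling `federated_rows(build_federated_demo())` returns one row per week from the warehouse side, with the cost figure left empty for any week the prototype source does not yet cover. The left join is a design choice for this sketch: it keeps missing prototype data visible rather than silently dropping those weeks.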

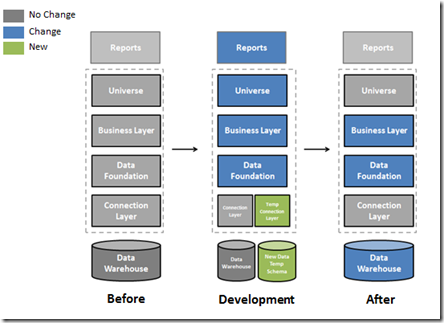

The diagram below illustrates this development plan in three stages, showing what elements of the existing environment are changed and added at each.

You can see that, with the IDT, we have a greater degree of flexibility (agility) in how Universes can be developed than we had in the old Universe Designer. Yes, we could change sources there but it was not terribly straightforward and there was no possibility of federating sources. That would have been a job for SAP Data Federator pre-4.0 but now some of that Data Federator technology has been integrated into the IDT to allow its use in the Universe development process.

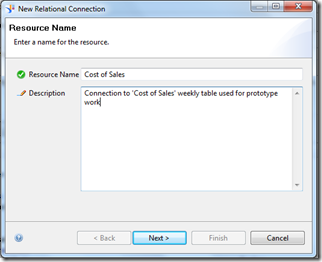

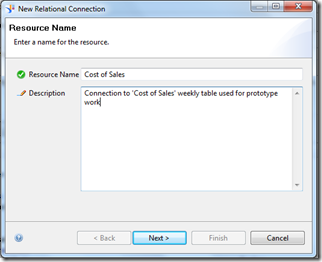

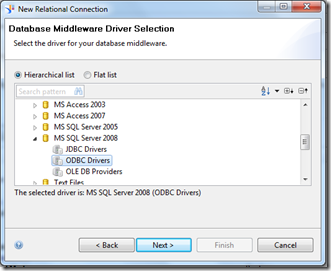

Let’s look in detail at Huck’s development activities. First up, after syncing and locking the latest version of the project from the central repository, he creates a new Connection Layer using the IDT interface. This is the layer that will connect to his new, temporary, ‘Cost of Sales’ data source. There are two types of connection possible in the IDT – Relational and OLAP.

The screenshot below shows the first details to be entered for a new Relational Connection.

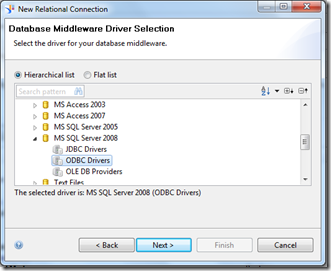

After that Huck selects the type of database he’s connecting to. He’s using SQL Server so has to make sure that the ODBC connection to this database exists in his sandpit environment as well as on the server which the connection will eventually use when shared. Actually, I think I’d always try to define the connection first on that shared server before sharing the connection layer with the central repository for local developers to sync with.

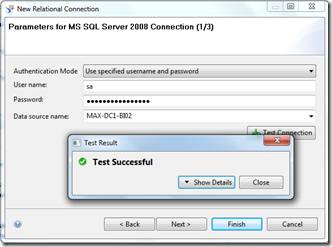

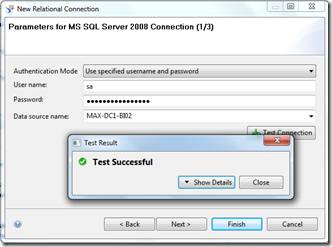

Next up Huck supplies the connection details for the database he’s set up for the prototype Cost of Sales data.

There are then a couple of parameter and initiation string style screens to fine tune (in these Big Data times, some of the pre-sets look quite quaint – presumably they’re upped when you set up a HANA connection). Once those are done the new Connection Layer is available in the local IDT Project.

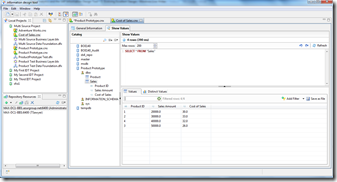

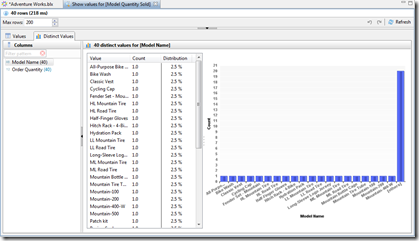

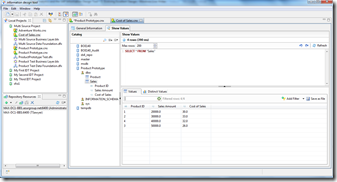

Within the Connection Layer it’s then possible to do some basic schema browsing and data profiling tasks. Useful just to confirm you’re in the right place.

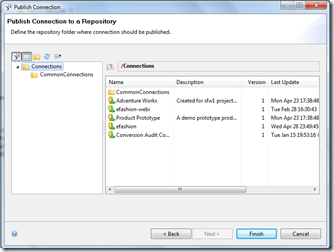

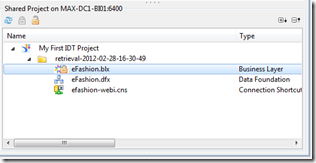

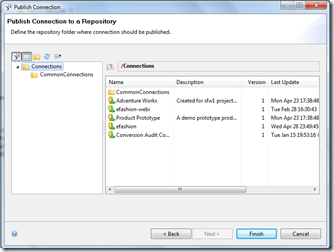

It’s important then to share the connection with the central repository. To do this, right click on your local connection layer in the IDT project pane and select ‘Publish Connection to Repository’.

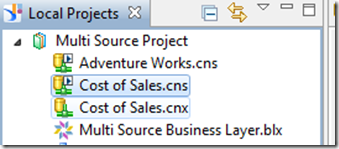

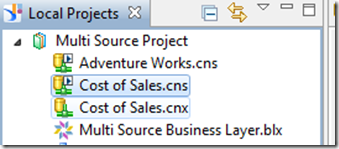

This turns your local .cnx into a shared, secured .cns, to which you can then create a shortcut in your local project folder.

You can only use secured .cns connections in a multi-source Data Foundation. It is not possible to add a .cnx to the definition of a multi source Data Foundation.

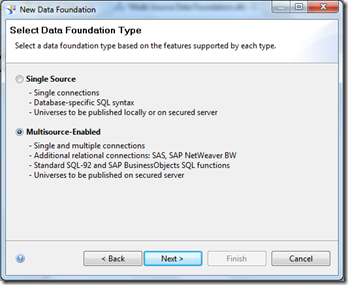

On that note, it seems a likely best practice when using the IDT to always create a new Data Foundation as a multi source layer rather than single source unless you are very certain that you will never have need to add extra sources. Fortunately for Huck he had done just that in the previous iteration when first creating the Data Foundation.

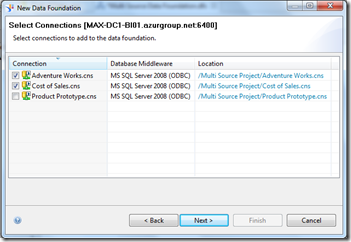

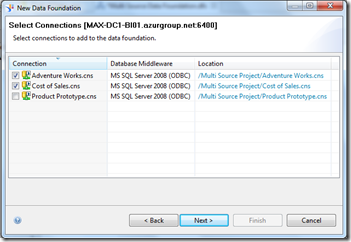

Within a multi source enabled Data Foundation it is always possible to add a new connection through the IDT interface. The available sources are displayed for the developer to select.

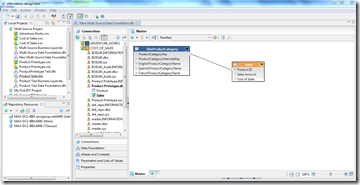

For each source added to the Data Foundation, it is necessary to define properties by which they can be distinguished. I particularly like the use of colour here which should keep things clear (although I wonder how quickly complexity could creep in when folk start using the IDT Families?).

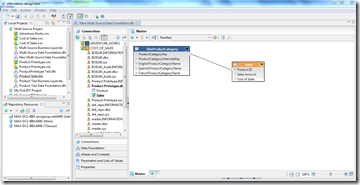

With all the required connections added to the Data Foundation, Huck can then start creating the joins between tables using the GUI in a very similar way to the development techniques he was used to in the classic Business Objects Universe Designer.

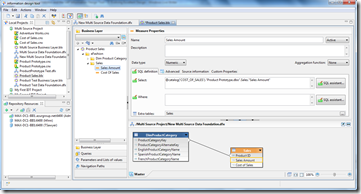

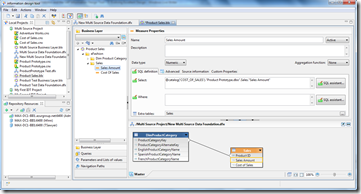

With the Data Foundation layer amended (and I’d normally expect it would be somewhat more complex than the two table structure shown above!), Huck can now move on to refresh his existing Business Layer against it. This is simply a matter of opening the existing Business Layer and bringing in the required columns from the new data source. Again, it’s very similar to the classic Universe Designer work where you define the SQL to be generated, build dimensions and measures, etc… for the end user access layer.

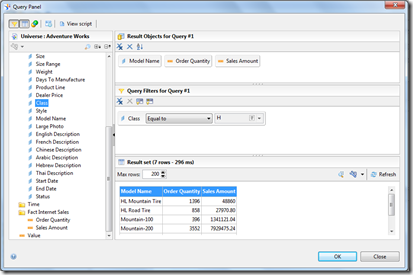

As discussed in the previous post, it’s possible to build your own queries in this Business Layer interface. In that previous post I’d suggested using queries as a way of managing consistent test scenarios. Here I’d suggest that the queries can be used as the first point of validation that the joins made between sources are correct and return the expected results.
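As an illustration of the kind of check such a validation query is performing, the sketch below compares the join keys from two sources, once their values have been pulled out of each side. It is plain Python rather than anything IDT-specific, and the function name and sample keys are hypothetical. It flags keys on the fact side with no match in the other source, and keys that appear more than once in the other source (which would multiply rows in the join).

```python
from collections import Counter


def check_join_keys(fact_keys, lookup_keys):
    """Report join-key problems between two sources.

    fact_keys:   key values from the driving table (duplicates are expected).
    lookup_keys: key values from the joined table (expected to be unique).
    """
    lookup_counts = Counter(lookup_keys)
    # Keys present on the fact side that the joined source cannot satisfy.
    unmatched = sorted(set(fact_keys) - set(lookup_counts))
    # Keys repeated in the joined source, which would inflate measures.
    duplicated = sorted(k for k, n in lookup_counts.items() if n > 1)
    return {"unmatched": unmatched, "duplicated": duplicated}


# Example with made-up week identifiers from each source.
result = check_join_keys(fact_keys=[1, 2, 3, 3], lookup_keys=[1, 2, 2])
print(result)
```

An empty list under both headings suggests the join is behaving as a clean many-to-one lookup; anything else is worth investigating before users see the figures.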

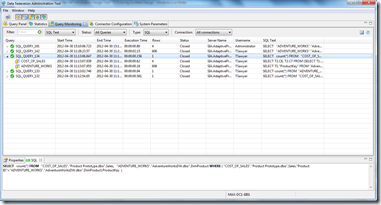

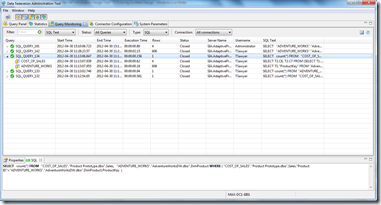

For a closer look at the queries being generated by the federated data foundation here I’d recommend looking at the Data Federation Administration Tool. It’s a handy little tool deserving of a blog post in itself really but, for now, I’ll just comment that it’s a core diagnostic tool installed alongside the other SAP BusinessObjects 4.0 Server and Client tools and allows developers to analyse the specific queries run, monitor performance, script test queries and adjust connection parameters. I’ve found it especially useful when looking at the queries from each source used and then the federated query across all of them.

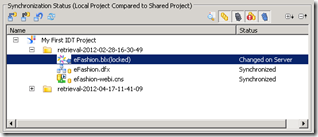

With the Business Layer now completed, it’s time to synchronise the IDT project with the central repository and publish the Universe. Publishing the Universe is achieved through right clicking on the Business Layer in the IDT pane and selecting ‘Publish’.

Huck now starts working with the users to build up reports against the published Universe. This allows them to confirm that their requirements can be met by the BI solution even whilst the ETL is being developed by Tom.

When Tom has completed his ETL work, he can then let Huck know that the new Data Warehouse table is ready to be included in the IDT. Huck then has to bring this new table into the Data Foundation and expose it through the Business Layer. When the new ETL created table is added to the Data Foundation, Huck has two options. He can simply remap the existing Cost of Sales column in the Business Layer to the new table or he could create a new Cost of Sales column from the new source. The former will allow for a seamless update of existing reports – after the new Universe is published, they’ll just be refreshed against the new table – whilst the latter will allow the Users to compare the old and new figures but would require additional work to rebuild the reports to fully incorporate the new, frequently updated Cost of Sales figures from the Warehouse.
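If the second option is chosen, the comparison of old and new figures could be sketched along these lines. This is only an illustration under stated assumptions: the manual figures are assumed to be keyed by ISO year and week, the daily warehouse figures are assumed to roll up to those same weeks, and the function names, tolerance and sample values are all hypothetical.

```python
from collections import defaultdict
from datetime import date


def weekly_totals(daily_rows):
    """Sum (date, amount) pairs into totals keyed by (ISO year, ISO week)."""
    totals = defaultdict(float)
    for day, amount in daily_rows:
        iso = day.isocalendar()  # (ISO year, ISO week number, ISO weekday)
        totals[(iso[0], iso[1])] += amount
    return dict(totals)


def compare_weekly(manual_weekly, daily_rows, tolerance=0.01):
    """Return weeks where the manual figure and the rolled-up daily figure differ.

    The result maps (year, week) to (manual_value, rolled_up_value); the
    rolled-up value is None when the daily source has no data for that week.
    """
    rolled = weekly_totals(daily_rows)
    differences = {}
    for week, manual in manual_weekly.items():
        new = rolled.get(week)
        if new is None or abs(new - manual) > tolerance:
            differences[week] = (manual, new)
    return differences


# Made-up sample data: two weeks of manual figures and three daily loads.
manual = {(2012, 1): 250.0, (2012, 2): 95.0}
daily = [
    (date(2012, 1, 2), 100.0),
    (date(2012, 1, 3), 150.0),
    (date(2012, 1, 9), 80.0),
]
print(compare_weekly(manual, daily))
```

Weeks that come back from `compare_weekly` are the ones to walk through with the users before retiring the manually prepared figures.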

Whichever option is selected though, the IDT clearly allows for a more rapid, agile delivery iteration than traditional Universe development offers. It’s only part of the BI/DW environment (arguably a small part) but it does bring flexibility to development, hastening delivery, where before there was little. Developers and users can work together, in parallel, throughout the iteration, each delivering value to the project. The old waterfall approach of building Data Warehouse, Universe and, finally, Reports in strict order is no longer necessary. The IDT supports a new value-driven delivery approach.

Hopefully, you’ve found this series of posts about Agile BI and the IDT of interest.